A while back Microsoft published their “Annual Work Trend Index” which, I guess, is a thing Microsoft does annually? It was my first time hearing about it.

Anyway, this is a report where Microsoft projects how they think the workforce will evolve in the coming years. It’s worth paying attention to because, I don’t know if you’ve worked recently, but most businesses run or at least utilize Microsoft products. Their vision for the future will be highly influential.

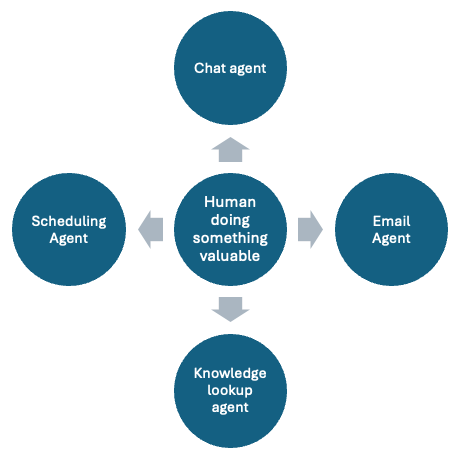

This graphic demonstrates how they think teams will evolve as AI adoption increases:

To summarize:

- First, everyone gets an AI assistant that “helps them work better and faster.” I think many AI companies would proclaim we’re already here. I would disagree, but this is the closest to our current state.

- Why would I disagree? We still haven’t reached “AI Assistant” levels yet — people who heavily use AI have to spend lots of time building their AI assistants by combining lots of LLMs and tools into custom workflows. Also, the jury is still out on whether they actually help people “work better and faster.”

- Second, AI agents join your teams as “digital colleagues” and basically function as remote teammates (who happen to live in a datacenter). We’re still a long way from this, but there are companies pushing it already. Right now this is all marketing smoke and mirrors.

- Finally, humans will just act as managers of AI teams: they tell multiple AI agents what to do, and the AIs go off and do it.

Let’s expand on what phase three up there entails. As they explain it:

As agents increasingly join the workforce, we’ll see the rise of the agent boss: someone who builds, delegates to and manages agents to amplify their impact and take control of their career in the age of AI. From the boardroom to the frontline, every worker will need to think like the CEO of an agent-powered startup. In fact, leaders expect their teams will be training (41%) and managing (36%) agents within five years.

There’s only one problem with this model for the future of employment. Think of the many roles in your company right now. Which do you think is easiest for an AI to do? If you said “The job of a middle manager,” then you are correct. Or, as Anthropic put it after they put an AI in charge of running a vending machine:

… we think this experiment suggests that AI middle-managers are plausibly on the horizon. That’s because, although Claudius didn’t perform particularly well, we think that many of its failures could likely be fixed or ameliorated: improved “scaffolding” (additional tools and training like we mentioned above) is a straightforward path by which Claudius-like agents could be more successful. General improvements to model intelligence and long-context performance—both of which are improving rapidly across all major AI models—are another. It’s worth remembering that the AI won’t have to be perfect to be adopted; it will just have to be competitive with human performance at a lower cost in some cases.

That last sentence is the important one. Anthropic is saying “for AI to replace managers it doesn’t have to be perfect, it just has to be competitive with your typical middle manager at a lower cost.”

So Microsoft’s model is somewhat deceptive. Surely they’re aware of the capacity of AI to potentially replace middle managers, which means their third phase isn’t people managing teams of AIs. It’s AIs managing teams of other AIs. So where are the people?

As a reminder, Microsoft and Anthropic are both heavily invested in selling AI. In fact, they’re so invested that I wouldn’t trust their projection of the future.

So how should we try and use AI? What future are we working towards? And how can we get there?

A more humane model

Let’s take Microsoft’s “Phase III” at face value, a human being leading a team of AI agents, and skip the implied “AIs just doing everything” for now.

The problem with Microsoft’s “Phase III” is that it inverts the relationship between AI and person. Microsoft says “AI will do all the valuable stuff, and you’ll just babysit them.” But that’s not actually what AI is particularly good at — and it’s definitely not why you hire people.

You hire people because they have unique, valuable skills. Here’s an example. Let’s talk about customer support — especially since that’s an area AI is threatening to disrupt (maybe even replace entirely).

Think about your own experiences with customer support. I imagine the incredibly infuriating experiences were ones where someone hopped on, read the call script, and said “Thank you for your patience, sir” in exactly the same tone they would use while reading the phone book. Maybe you bounced from agent to agent, feeling like the ball in a game of keep-away. In the end, you vowed to never do business with that company again.

Now think of the good ones. You got to a real person right away. They listened to your complaint. They said “That sounds so frustrating” in a way that made you feel like they actually meant it. Did they get the issue resolved faster than the other guys? Maybe, maybe not. Either way, you still left the call feeling a warmth for the person, and the company. You probably left a review saying “give this agent a raise!” You are probably a repeat customer.

The unique value that the best call center agents bring is their empathy. The ability to listen, to understand, and to make the other person feel heard and understood. That just happens to be a value that AIs are uniquely ill-suited to replicate. Even if they can do the mechanical things required (allow the other person to speak, restate their problem, express sympathy, all in a concerned tone of voice), the person on the other end of the phone ultimately knows they are talking to a machine that is incapable of understanding.

If you look at the ultimate goal of customer service as “get rid of the end user as quickly and cheaply as possible,” then sure, an AI can do that. It can point you to a self-service article and then hang up on you. And it can do that 100,000 times for pennies.

But the ultimate goal of customer service isn’t to get rid of someone. It’s to make sure someone continues being a customer when they experience an issue.

(This assumes they have other options. It’s also why cable companies have notoriously poor customer service: they operate as de facto monopolies. Their goal actually IS to get rid of the end user as quickly and cheaply as possible, because they don’t have to worry about the end user going elsewhere.)

Looking at customer service through the lens of retention, it’s obvious why a human being is preferable to an AI. Because they have empathy. Their ability to connect on a human level is the most important thing they do. THAT is why you hire them.

How could an AI help someone be a better customer service agent?

Customer service agents usually have to do a few things:

- They have to answer the phone

- They have to take the person’s information (and look them up in a database of some kind if they’re already customers)

- They have to communicate about the problem

- They have to understand what the problem is

- They have to provide a solution of some kind, or direct the user to someone who can

- They have to document all this in a ticketing system

It’s like that for most calls. Now I would like you to imagine a call. The agent picks up, greets the user and gets their information. An AI agent is listening to the call, and it automatically pulls up the user’s information without the human agent even needing to touch the keyboard.

The human agent continues to communicate with the user. Maybe their internet is slow today. The AI agent automatically pulls up a knowledge base article about how to diagnose the problem. Maybe it even automatically kicks off a line test while the human agent is talking to the user.

Throughout the entire process, the AI agent is transcribing what is happening into a ticket.

The person can focus on the caller and their problem — they can focus on empathizing. The problem gets solved quicker. At the end the agent hangs up the phone, and instead of taking time updating the ticket they review the summary, maybe correct one or two things, and then close the ticket.
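To make that division of labor concrete, here is a minimal Python sketch of the call described above. Everything in it is a hypothetical stand-in of my own: the `CUSTOMERS` and `PLAYBOOK` dictionaries, the `CallAssistant` class, and the keyword matching that takes the place of whatever speech and language models a real product would use. It is an illustration of the idea, not a description of any actual call center tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical stand-ins for a real customer database and knowledge base.
CUSTOMERS: Dict[str, Dict[str, str]] = {
    "555-0100": {"name": "Dana Lee", "plan": "Fiber 500"},
}
# keyword -> (article to surface, background task to start); both are assumptions.
PLAYBOOK: Dict[str, Tuple[str, str]] = {
    "slow": ("KB-12: Diagnosing slow internet", "line test started"),
}


@dataclass
class DraftTicket:
    customer: Optional[Dict[str, str]] = None
    transcript: List[str] = field(default_factory=list)
    suggestions: List[str] = field(default_factory=list)
    actions: List[str] = field(default_factory=list)
    reviewed_by: Optional[str] = None
    closed: bool = False


class CallAssistant:
    """Listens to the call and handles the busywork; the human does the talking."""

    def __init__(self, caller_id: str) -> None:
        # Pull up the customer record so the human never touches the keyboard.
        self.ticket = DraftTicket(customer=CUSTOMERS.get(caller_id))

    def hear(self, speaker: str, text: str) -> None:
        # Transcribe every utterance into the draft ticket.
        self.ticket.transcript.append(f"{speaker}: {text}")
        if speaker != "caller":
            return
        # Keyword matching stands in for real topic detection.
        for keyword, (article, task) in PLAYBOOK.items():
            if keyword in text.lower() and article not in self.ticket.suggestions:
                self.ticket.suggestions.append(article)
                self.ticket.actions.append(task)


def close_after_review(ticket: DraftTicket, reviewed_by: str) -> DraftTicket:
    # The ticket only closes once a person has looked it over.
    ticket.reviewed_by = reviewed_by
    ticket.closed = True
    return ticket


assistant = CallAssistant("555-0100")
assistant.hear("agent", "Thanks for calling. How can I help?")
assistant.hear("caller", "My internet is really slow today.")
ticket = close_after_review(assistant.ticket, reviewed_by="human agent")
print(ticket.suggestions, ticket.actions)
```

Notice what the assistant never does: it never speaks to the caller. It only fills in the ticket and queues up resources, and nothing closes until a human has reviewed it.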

It is, again, an inversion of how Microsoft envisions the future. They see a workforce of managers keeping an eye on AIs doing valuable work. What I see is people who are freed to focus on the most valuable thing they bring to their job, whatever that may be, surrounded by an array of AI agents who are giving them the space to do what only they can do. It would look something like this:

For a call center agent, the valuable thing is communicating and empathizing with customers. For a programmer it might be solving a particularly tricky problem that hasn’t been encountered before. For a cyber security agent it might be providing in-person trainings on a team-by-team basis as opposed to spending all day looking through alerts in a queue.

Or for a manager, like me, I would spend my time connecting with my team members, helping them improve their skills, writing long-term strategies and finding better ways to secure our organization. And AI would answer my emails from vendors, respond to chats about things that I already answered somewhere else, spend time pulling together resources for me to use, and more.

It would take away the stuff that isn’t uniquely valuable, so I can focus on what I do that is most important (shoutout to Essentialism).

It doesn’t have to be how they pitch it

Again, don’t listen to sales! A while back this tweet went viral:

And this humane AI strategy is how you implement it (at work, at least). Focus on doing what you do well, and let AI handle the busywork. That’s the only way AI isn’t going to become a net negative for society. Don’t let them convince you otherwise.